Computer Vision | Deep Learning with Tensorflow & Keras (ResNet50, GPU training)

Objective

Implement ResNet from scratch using TensorFlow and Keras

Train on CPU, then switch to GPU to compare speed

If you want to jump right to using a ResNet, have a look at Keras' pre-trained models. In this repo I am implementing a 50-layer ResNet from scratch not out of need, as implementations already exist, but as a learning process.

See project repository on GitHub.

Packages used

python 3.7.9

tensorflow 2.7.0 (includes keras)

scikit-learn 0.24.1

numpy 3.7.9

pillow 8.2.0

opencv-python 4.4.0.46

GPU support

The following NVIDIA software must be installed on your system:

NVIDIA® GPU drivers: CUDA® 11.2 requires 450.80.02 or higher.

CUDA® Toolkit: TensorFlow supports CUDA® 11.2 (TensorFlow >= 2.5.0).

CUPTI ships with the CUDA® Toolkit.

cuDNN SDK 8.1.0 (see cuDNN versions).
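Once the software above is installed, a quick check like the one below can confirm whether TensorFlow actually sees a GPU. This is a minimal sketch and not part of the repo: the helper name `describe_gpu_support` is made up for illustration, and the CUDA/cuDNN entries are read with `.get()` because CPU-only builds may not report them.

```python
import tensorflow as tf


def describe_gpu_support():
    """Collect a few facts about the installed TensorFlow build (hypothetical helper)."""
    build = tf.sysconfig.get_build_info()  # dict-like build metadata (TF >= 2.3)
    return {
        "tf_version": tf.__version__,
        "built_with_cuda": tf.test.is_built_with_cuda(),
        # These keys may be missing on CPU-only builds, hence .get()
        "cuda_version": build.get("cuda_version"),
        "cudnn_version": build.get("cudnn_version"),
        # An empty list means TensorFlow will run on CPU only
        "visible_gpus": [d.name for d in tf.config.list_physical_devices("GPU")],
    }


report = describe_gpu_support()
for key, value in report.items():
    print(f"{key}: {value}")
```

If `visible_gpus` is empty even though a GPU is present, the usual suspects are a driver, CUDA or cuDNN version that does not match the installed TensorFlow build.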

Dataset

For this project I'm using the 10 Animals dataset available on Kaggle.

Implementation

ResNet50

ResNet is a family of deep neural network architectures introduced in 2015 by He et al. The original paper discussed 5 different architectures: 18-, 34-, 50-, 101- and 152-layer neural networks. I am implementing the 50-layer ResNet, or ResNet50.

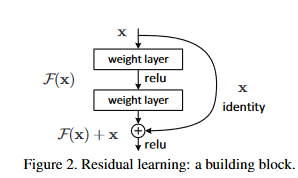

ResNets proposed a solution for the exploding/vanishing gradients problem that is common when building deeper and deeper NNs: take the output of one layer, skip over a few layers, and add it back in deeper in the network. This is called a residual block (also, identity block). The authors illustrate this mechanism with a figure in their article.
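In Keras, the skip connection comes down to keeping a reference to the block's input and adding it back with an `Add` layer. The sketch below assumes the three-convolution "bottleneck" layout that the paper uses for ResNet50; the function name `identity_block`, the filter sizes and the input shape are illustrative and not necessarily what this repo uses.

```python
import tensorflow as tf
from tensorflow.keras import layers


def identity_block(x, filters, kernel_size=3):
    """Bottleneck residual block whose shortcut is the unchanged input (illustrative)."""
    f1, f2, f3 = filters
    shortcut = x  # the value that "jumps over" the three convolutions

    y = layers.Conv2D(f1, 1)(x)
    y = layers.BatchNormalization()(y)
    y = layers.Activation("relu")(y)

    y = layers.Conv2D(f2, kernel_size, padding="same")(y)
    y = layers.BatchNormalization()(y)
    y = layers.Activation("relu")(y)

    y = layers.Conv2D(f3, 1)(y)
    y = layers.BatchNormalization()(y)

    # Element-wise addition: only valid because y and shortcut have the same shape
    y = layers.Add()([y, shortcut])
    return layers.Activation("relu")(y)


# f3 must equal the number of input channels (256 here) for the addition to work
inputs = tf.keras.Input(shape=(56, 56, 256))
outputs = identity_block(inputs, filters=(64, 64, 256))
model = tf.keras.Model(inputs, outputs)
print(model.output_shape)
```

The output shape equals the input shape, which is exactly the condition that makes the plain shortcut possible.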

The identity block can be used when the input x has the same dimensions (width, height and number of channels) as the output of the layer to which we are forwarding x; otherwise the addition wouldn't be possible. When this condition is not met, I use a convolution block like in the image below:

The only difference between the identity block and the convolution block is that the latter has an additional convolution layer (plus a batch normalization) on the skip connection path. The purpose of this convolution layer is to resize x so that its dimensions match the output and I can add the two together.
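A hedged Keras sketch of such a convolution block follows. It assumes a 1x1 convolution with the same stride as the main path on the shortcut, as in the paper's projection shortcuts; `convolution_block`, the filter sizes and the input shape are illustrative names and values, not taken from this repo.

```python
import tensorflow as tf
from tensorflow.keras import layers


def convolution_block(x, filters, kernel_size=3, strides=2):
    """Bottleneck residual block with a resizing convolution on the shortcut (illustrative)."""
    f1, f2, f3 = filters

    # Shortcut path: 1x1 convolution + batch norm so x matches the main path's shape
    shortcut = layers.Conv2D(f3, 1, strides=strides)(x)
    shortcut = layers.BatchNormalization()(shortcut)

    # Main path: same three convolutions as the identity block, first one strided
    y = layers.Conv2D(f1, 1, strides=strides)(x)
    y = layers.BatchNormalization()(y)
    y = layers.Activation("relu")(y)

    y = layers.Conv2D(f2, kernel_size, padding="same")(y)
    y = layers.BatchNormalization()(y)
    y = layers.Activation("relu")(y)

    y = layers.Conv2D(f3, 1)(y)
    y = layers.BatchNormalization()(y)

    y = layers.Add()([y, shortcut])
    return layers.Activation("relu")(y)


# Input and output shapes differ, so a plain identity shortcut would not add up
inputs = tf.keras.Input(shape=(56, 56, 256))
outputs = convolution_block(inputs, filters=(128, 128, 512), strides=2)
model = tf.keras.Model(inputs, outputs)
print(model.output_shape)
```

Here the spatial size is halved and the channel count doubled, and the shortcut convolution applies the same change to x before the addition.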

Following the ResNet50 architecture described in He et al. 2015, the architecture I'm implementing in this repo has the structure illustrated below:
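As a textual companion to the diagram, the stage layout of the 50-layer network in Table 1 of He et al. can be written as a small Python structure. This follows the paper and is only a sketch: the constant and function names are made up for illustration, and the repo's code may organize the stages differently (for example, the final dense layer would have 10 outputs for the 10 Animals dataset rather than the paper's 1000).

```python
# (stage name, number of bottleneck blocks, filters of the three convolutions per block)
RESNET50_STAGES = [
    ("conv2_x", 3, (64, 64, 256)),
    ("conv3_x", 4, (128, 128, 512)),
    ("conv4_x", 6, (256, 256, 1024)),
    ("conv5_x", 3, (512, 512, 2048)),
]

CONVS_PER_BLOCK = 3  # 1x1, 3x3, 1x1 in every bottleneck block


def count_weighted_layers(stages):
    """Count layers the way the paper does: shortcut convolutions are not included."""
    stem = 1  # the initial 7x7 convolution (conv1)
    head = 1  # the final fully connected layer
    blocks = sum(num_blocks for _, num_blocks, _ in stages)
    return stem + blocks * CONVS_PER_BLOCK + head


total_layers = count_weighted_layers(RESNET50_STAGES)
print(total_layers)  # 1 + 16 * 3 + 1 = 50
```

In each stage the first block typically needs a convolution block (the shape changes), while the remaining blocks can be identity blocks.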

GPU versus CPU training

The easiest way to see the difference in training duration is to open the notebook in this repository, resnet-keras-code-from-scratch-train-on-gpu.ipynb, on Kaggle and follow the instructions for activating the GPU contained in the notebook. This is what I did in my case, as I don't have a separate GPU on my laptop.
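For a rough feel of the difference outside the notebook, the same operation can be timed on each device with `tf.device`. The sketch below is not the notebook's training loop: it times a single small convolution, the helper name `time_conv` and the tensor sizes are arbitrary, and the GPU branch only runs if TensorFlow can see one.

```python
import time

import tensorflow as tf


def time_conv(device_name, repeats=3):
    """Average seconds per 2D convolution on the given device (illustrative helper)."""
    with tf.device(device_name):
        images = tf.random.normal((8, 64, 64, 3))  # batch of random "images"
        kernel = tf.random.normal((3, 3, 3, 16))   # 3x3 kernel, 3 -> 16 channels
        # Warm-up run so one-time setup cost is not included in the measurement
        tf.nn.conv2d(images, kernel, strides=1, padding="SAME").numpy()
        start = time.perf_counter()
        for _ in range(repeats):
            # .numpy() forces the computation to finish before the clock stops
            tf.nn.conv2d(images, kernel, strides=1, padding="SAME").numpy()
        return (time.perf_counter() - start) / repeats


cpu_seconds = time_conv("/CPU:0")
print(f"CPU: {cpu_seconds:.6f} s per convolution")

if tf.config.list_physical_devices("GPU"):
    gpu_seconds = time_conv("/GPU:0")
    print(f"GPU: {gpu_seconds:.6f} s per convolution")
else:
    print("No GPU visible to TensorFlow; skipping the GPU measurement")
```

A workload this small may not show much of a gap; the advantage of a GPU generally grows with batch size and model depth, which is why timing the full training run gives the more meaningful comparison.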

To set up GPU support on a physical machine, follow these instructions.

Project contents

To process the data into square images of the pre-defined size (as per the model architecture definition), run the make_dataset.py script from the src/data folder.
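To illustrate what this kind of preprocessing involves, here is a minimal Pillow sketch that turns an arbitrary image into a square one of a fixed size. It is an assumption about the general approach, not the contents of make_dataset.py: the function name `to_square`, the choice of padding (rather than cropping) and the 224-pixel default are all illustrative.

```python
from PIL import Image


def to_square(image, size=224, fill=(0, 0, 0)):
    """Pad an image to a square canvas, then resize it to size x size (illustrative)."""
    image = image.convert("RGB")
    side = max(image.size)  # the longer edge decides the canvas size
    canvas = Image.new("RGB", (side, side), fill)
    # Center the original image on the square canvas
    offset = ((side - image.width) // 2, (side - image.height) // 2)
    canvas.paste(image, offset)
    return canvas.resize((size, size), Image.BILINEAR)


# Example with a synthetic 300x200 image instead of a file from the dataset
example = Image.new("RGB", (300, 200), (120, 180, 60))
squared = to_square(example, size=224)
print(squared.size)
```

Padding keeps the whole animal in frame at the cost of some border pixels; center-cropping is the usual alternative when the borders matter more than the edges of the picture.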

To train a model, run the example_train.py script:

to see available parameters:

and select your preferred training options.

To make predictions using a pre-trained model, use the example_predict.py script:

and choose the desired setting from:
